Real

Real number generation using neural network classification.

Note: This is a personal project. I believe this is original work, and I am not aware of similar work carried out by anyone else.

It seems most people want to know what this is used for; this is a comment I wrote on Reddit:

"I'll try to explain with a little example. Say we need to predict the partition coefficient of a chemical molecule. A partition coefficient (P), or log P, can take any real value, positive or negative, e.g. acetamide = -1.16. If we want to predict such values for a chemical molecule with a neural network, then we have to use a linear activation function in the last layer and train it as a regression, which may or may not succeed. My idea is to avoid this step and predict real numbers as a binary classification with a certain length. As a proof of my idea I made an autoencoder, which was able to get fairly good, but not the best, predictions."

There are two ways that I know of to generate real numbers using neural networks: the regression and seq2seq approaches. From experience I can say that both regression and recurrent (seq2seq) methods are computationally heavy, and I assume the transformer (seq2seq) technique may also need more computation. In this project my goal was to generate real numbers using a classification-only approach with a simple neural network. The advantages of such a method would be fast training and quick prediction of outputs. Another novel application is building a neural-network-enabled calculator for deterministic real number operations.

Real to Vectors.

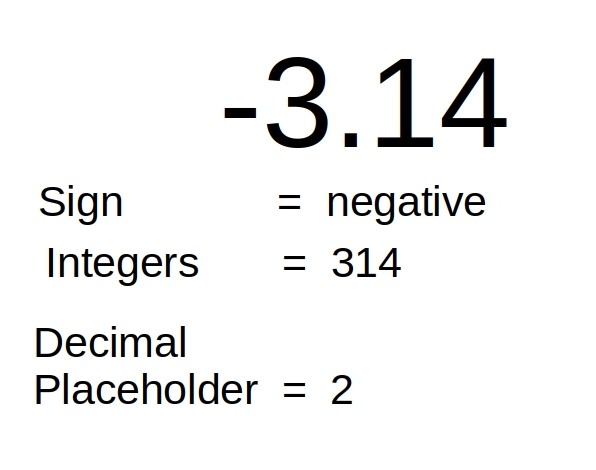

A real number in its raw form cannot be used with a classification method. We need to convert real numbers to a binary vector form. First we separate a real number into its three corresponding parts: Sign, Integers, and Decimal/Placeholder. An example of such a breakup is shown in the accompanying image.
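The breakup described above can be sketched in a few lines of Python. This is only a minimal sketch under assumed conventions, not the project's code: the function names `one_hot` and `real_to_vectors`, the default length `d=6`, the "1 means negative" sign bit, and the one-hot layout per digit are all hypothetical choices made for illustration.

```python
from decimal import Decimal

def one_hot(index, size):
    """Return a list of `size` zeros with a single 1 at `index`."""
    return [1 if j == index else 0 for j in range(size)]

def real_to_vectors(text, d=6):
    """Split a finite decimal string into sign, integer-digit and placeholder vectors.

    Assumed layout (not taken from the project code):
      sign        -> [1] for negative, [0] otherwise
      integers    -> d one-hot rows of length 10, most significant digit first
      placeholder -> one-hot of length d + 1, index = digits after the point
    """
    sign, digits, exponent = Decimal(text).as_tuple()
    if exponent > 0:  # e.g. Decimal("1E+2"): pad with zeros so the exponent is 0
        digits, exponent = digits + (0,) * exponent, 0
    places = -exponent
    if len(digits) > d or places > d:
        raise ValueError(f"{text!r} needs more than d={d} digits")
    padded = (0,) * (d - len(digits)) + tuple(digits)
    return [sign], [one_hot(v, 10) for v in padded], one_hot(places, d + 1)

# Acetamide's log P from the example above, with a maximum length of 4 digits.
sign, integers, placeholder = real_to_vectors("-1.16", d=4)
print(sign)                                # [1]
print([row.index(1) for row in integers])  # [0, 1, 1, 6]
print(placeholder.index(1))                # 2
```

Parsing the number as a string through `decimal.Decimal` keeps the digits exactly as written, which avoids binary floating-point noise when the digits are extracted.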

The sign determining value $k$ can be found by

For integers we first need to find the matching vector $\mathbf{i}$. The vector can then be transformed into a scalar $i$ by taking its dot product with a vector whose entries are powers of 10. Here $d$ is the maximum integer length.

The decimal placeholder value $p$, which determines where the decimal point should be placed in the integers, can be obtained by

In the end real numbers can be calculated using the formula.
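One way to read the decoding steps above is sketched below. It is a hedged illustration, not the project's implementation: it assumes the sign head outputs the probability of a negative number, that each of the $d$ digit positions is a 10-way classification resolved by argmax, that $p$ counts the digits after the decimal point, and that the final value is $k \cdot i / 10^{p}$. The helper names `argmax` and `vectors_to_real` are hypothetical.

```python
def argmax(values):
    """Index of the largest entry in a list."""
    return max(range(len(values)), key=values.__getitem__)

def vectors_to_real(sign_prob, digit_scores, place_scores):
    """Decode three head outputs into one real number (assumed conventions).

    sign_prob    -> probability that the number is negative
    digit_scores -> d rows of 10 class scores, most significant digit first
    place_scores -> scores over 0..d digits after the decimal point
    """
    k = -1 if sign_prob > 0.5 else 1                  # sign value k
    digits = [argmax(row) for row in digit_scores]    # the vector i
    d = len(digits)                                   # maximum integer length
    powers = [10 ** (d - 1 - j) for j in range(d)]    # 10^(d-1), ..., 10^0
    i = sum(a * b for a, b in zip(digits, powers))    # dot product -> scalar i
    p = argmax(place_scores)                          # decimal placeholder p
    return k * i / 10 ** p

# Digits 0, 1, 1, 6 with two decimal places and a negative sign.
rows = [[1.0 if c == v else 0.0 for c in range(10)] for v in (0, 1, 1, 6)]
print(vectors_to_real(0.9, rows, [0, 0, 1, 0, 0]))  # -1.16
```

Dividing by `10 ** p` instead of multiplying by `10 ** -p` keeps the result correctly rounded, so values such as 84.6 do not come back as 84.60000000000001.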

Note: I am mediocre at mathematics; kindly forgive any errors.

Neural Network.

A linear neural network was used for this project, but other varieties of neural network should also work. Because my primary aim was to validate the idea, I used an autoencoder model to generate real numbers. The autoencoder model has three input heads and three output heads.

- Input Heads: Sign, Integers, Decimal
- Output Heads: Sign, Integers, Decimal
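To make the three-in, three-out layout concrete, here is a rough forward-pass sketch in plain Python. Everything specific in it is an assumption for illustration rather than the project's architecture: the sizes `D` and `HIDDEN`, merging the input heads by concatenation, a single linear bottleneck, a sigmoid on the sign head, and a softmax per digit position and over the decimal placeholder. Training is left out.

```python
import math
import random

def linear(x, weights, bias):
    """Dense layer without activation: y = W x + b."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def init(n_out, n_in, rng):
    """Small random weights and zero bias for an n_in -> n_out layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def softmax(z):
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

D, HIDDEN = 6, 16                      # hypothetical sizes
rng = random.Random(0)
encoder = init(HIDDEN, 1 + D * 10 + (D + 1), rng)
sign_head = init(1, HIDDEN, rng)
integer_head = init(D * 10, HIDDEN, rng)
decimal_head = init(D + 1, HIDDEN, rng)

def forward(sign, integers, decimal):
    """sign: [bit]; integers: D rows of 10 values; decimal: D + 1 values."""
    # Assumed merge: the three input heads are concatenated into one vector.
    x = sign + [v for row in integers for v in row] + decimal
    h = linear(x, *encoder)                               # linear bottleneck
    sign_out = sigmoid(linear(h, *sign_head)[0])          # binary output
    flat = linear(h, *integer_head)
    integer_out = [softmax(flat[j * 10:(j + 1) * 10]) for j in range(D)]
    decimal_out = softmax(linear(h, *decimal_head))
    return sign_out, integer_out, decimal_out
```

With this layout each output head can be trained with its own classification loss, which is what lets the per-head losses be inspected separately.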

Result

Real: [29.462 10.119 16. 54.483 81.462 77. 5.2 64.73 17.3 17.25]

Pred: [52.462, 52.119, 16.0, 92.483, 52.462, 77.0, 5.2, 64.73, 17.3, 17.25]

Loss: 0.7908857719954486

Real: [77.97 48.1 51.95 25.358 71.813 21.05 92.2 84.6 61.4 51.272]

Pred: [77.97, 48.1, 51.95, 49.358000000000004, 46.813, 21.05, 92.2, 84.60000000000001, 61.400000000000006, 29.272000000000002]

Loss: 0.27221214151122436

Real: [49.9 62.75 51.209 2.33 0.2 45.3 24.188 7.83 23.14 75.9]

Pred: [49.900000000000006, 62.75, 17.209, 2.33, 0.2, 45.300000000000004, 25.188, 7.83, 23.14, 75.9]

Loss: 0.35590826826738353

The outputs are very encouraging, and the neural network was able to predict the majority of the real numbers correctly. The loss functions corresponding to the Sign and Decimal output heads were optimized properly. In my opinion, the variability in the generated real numbers was due to the suboptimal loss function used for the Integers head. An improved loss function for the Integers head might increase the accuracy of prediction. Overfitting the model also helped to attain good predictions, and adding dropout significantly affected the output results.
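The three batches above can be checked with a short script. This is only an illustrative sketch: the helper names `compare` and `differs_by_whole_number` and the tolerance are assumptions, and the numbers are copied from the Real/Pred listings. It counts the matches per batch and tests whether each miss is off by a whole number, which would be consistent with the errors coming from the leading integer digits rather than from the sign or the decimal placement.

```python
import math

# Real/Pred pairs copied from the three batches listed above.
batches = [
    ([29.462, 10.119, 16.0, 54.483, 81.462, 77.0, 5.2, 64.73, 17.3, 17.25],
     [52.462, 52.119, 16.0, 92.483, 52.462, 77.0, 5.2, 64.73, 17.3, 17.25]),
    ([77.97, 48.1, 51.95, 25.358, 71.813, 21.05, 92.2, 84.6, 61.4, 51.272],
     [77.97, 48.1, 51.95, 49.358000000000004, 46.813, 21.05, 92.2,
      84.60000000000001, 61.400000000000006, 29.272000000000002]),
    ([49.9, 62.75, 51.209, 2.33, 0.2, 45.3, 24.188, 7.83, 23.14, 75.9],
     [49.900000000000006, 62.75, 17.209, 2.33, 0.2, 45.300000000000004,
      25.188, 7.83, 23.14, 75.9]),
]

def compare(real, pred, tol=1e-9):
    """Return (number of matches, list of mismatched (real, pred) pairs)."""
    misses = [(r, p) for r, p in zip(real, pred)
              if not math.isclose(r, p, abs_tol=tol)]
    return len(real) - len(misses), misses

def differs_by_whole_number(r, p, tol=1e-9):
    """True when real and predicted values share the same fractional part."""
    diff = abs(r - p)
    return math.isclose(diff, round(diff), abs_tol=tol)

for real, pred in batches:
    hits, misses = compare(real, pred)
    whole = all(differs_by_whole_number(r, p) for r, p in misses)
    print(f"{hits} of {len(real)} match; misses off by whole numbers: {whole}")
# 6 of 10 match; misses off by whole numbers: True
# 7 of 10 match; misses off by whole numbers: True
# 8 of 10 match; misses off by whole numbers: True
```

The tolerance absorbs floating-point noise such as 84.60000000000001 versus 84.6, so those pairs count as matches.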

Project Github Page: https://github.com/Gananath/Real

Future Plans.

- Improving the accuracy of prediction.
- Building a neural network calculator with this idea.
- Possible applications include any work that needs real numbers, e.g. velocity prediction, drug-likeness prediction, etc.